AI models analyze data to find patterns and make predictions, and they need large amounts of data for their algorithms to work effectively. AI is among the most transformative technologies of this era. It helps make predictions, automate tedious tasks, generate content, create images from a simple prompt, and more!

AI is now mature enough to simplify complex organizational tasks, saving time and reducing costs. AI models help businesses stay profitable and competitive by giving them strong predictions to plan ahead.

Moreover, AI models are what make AI-powered software capable of reasoning, speaking, taking actions, analyzing, and evaluating. Let’s look at how they work and which major AI models make software smart and effective.

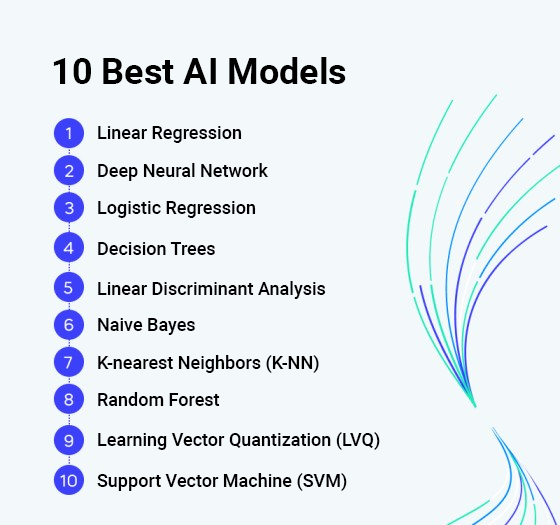

10 Major AI Models To Integrate With Your AI Software

AI is a broad field with powerful subsets, including deep learning, machine learning, expert systems, and more. The 10 models below help machines learn from experience and make predictions from data.

Linear Regression

Linear regression models the relationship between one or more independent variables and a dependent variable in a dataset. It is a statistical algorithm suited to data where the variables are related and depend on one another. It is widely used in banking, insurance, and healthcare solutions, among others.

A simple linear regression involves a single independent variable and finds a linear function that predicts the dependent variable as accurately as possible. In multiple linear regression, several independent variables predict the outcome. However, its simplicity can also be a limitation: it assumes a linear relationship between the variables, which can lead to misleading results when that assumption isn’t met, and it is sensitive to outliers.
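To make the idea concrete, here is a minimal sketch of simple linear regression using the closed-form least-squares formulas. The function name and the sample numbers are illustrative assumptions, not taken from a real dataset.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (the 1/n factors cancel out).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data that follows y = 2x + 1 exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_simple_linear_regression(xs, ys)

# The same data with one outlier: the fitted slope changes noticeably.
ys_outlier = [3.0, 5.0, 7.0, 30.0]
slope_outlier, intercept_outlier = fit_simple_linear_regression(xs, ys_outlier)

print(slope, intercept)
print(slope_outlier, intercept_outlier)
```

Comparing the two fits shows the outlier sensitivity mentioned above: a single extreme point is enough to pull the line away from the trend of the other points.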

Deep Neural Network

It is one of the most fascinating AI models, inspired by the neurons of the human brain. A deep neural network (DNN) has multiple layers between its input and output, each built from interconnected units known as artificial neurons. Deep neural networks are mainly used for speech recognition, image recognition, and natural language processing (NLP).

One of the main challenges with DNNs is avoiding overfitting, where the network becomes too tailored to the training data and performs poorly on new, unseen data. Despite these challenges, the versatility and power of DNNs make them a cornerstone of modern machine learning and AI applications.
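The layered structure can be sketched as a forward pass through a tiny network. This is only an illustration: the weights below are hand-picked, hypothetical values rather than trained ones, and real networks are built with dedicated frameworks and trained with backpropagation.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one artificial neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Activation for the hidden layer: negative values become zero."""
    return [max(0.0, v) for v in values]


def sigmoid(z):
    """Squash the output into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, untrained weights: 2 inputs -> 2 hidden neurons -> 1 output.
W1 = [[1.0, -1.0], [0.5, 0.5]]
B1 = [0.0, 0.0]
W2 = [[1.0, 1.0]]
B2 = [0.0]


def forward(x):
    hidden = relu(dense(x, W1, B1))
    output = dense(hidden, W2, B2)[0]
    return sigmoid(output)


probability = forward([1.0, 1.0])
print(probability)
```

Training would adjust `W1`, `B1`, `W2`, and `B2` to reduce prediction error, and that is where overfitting has to be kept in check.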

Logistic Regression

Logistic regression is a statistical method used for binary classification. It predicts the probability of a binary outcome (such as yes/no, win/lose, alive/dead) based on one or more predictor variables. Unlike linear regression, which predicts a continuous outcome, logistic regression models the probability of a certain class or event, for example the probability of a team winning a game or a patient having a particular disease.

This is achieved by using the logistic function, which ensures that the model’s output is between 0 and 1, representing the probability of the outcome. The model coefficients are typically estimated using maximum likelihood estimation. Logistic regression is widely used in various fields like medical research, marketing, and social sciences for its ability to provide probabilities and classify data into categories.
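As a rough sketch of how this works, the example below fits a one-variable logistic regression by gradient descent on the average negative log-likelihood, which is one simple way to approximate the maximum likelihood estimate. The data (hours studied versus pass/fail), the learning rate, and the epoch count are all assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """The logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Gradient descent on the average negative log-likelihood."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical data: hours studied and whether the exam was passed (1) or not (0).
hours = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
passed = [0, 0, 1, 0, 1, 1]

w, b = fit_logistic(hours, passed)
p_low = sigmoid(w * 1.0 + b)   # estimated pass probability after 1 hour
p_high = sigmoid(w * 6.0 + b)  # estimated pass probability after 6 hours
print(p_low, p_high)
```

The outputs are probabilities, so turning them into a yes/no decision still requires a threshold, commonly 0.5.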

Decision Trees

Decision Trees are a type of supervised machine-learning algorithm used for both classification and regression tasks. They work by splitting the data into subsets based on the values of input features. In effect, they break a complex decision-making process down into a series of simpler decisions, forming a tree-like structure.

Each internal node of the tree represents a test on an attribute, each branch represents the outcome of the test, and each leaf node represents a class label or a continuous value. The paths from root to leaf represent classification rules or regression conditions. Decision Trees are popular due to their ease of interpretation and understanding, as they closely mirror human decision-making processes. However, they can be prone to overfitting, especially with complex data and without proper pruning or limitations on tree depth.
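The splitting idea can be sketched with a small classification tree that picks each split by Gini impurity, one common criterion among several. The loan-style data, the label names, and the `max_depth` limit are hypothetical choices made for this example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 means every label in the group is the same."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Try every feature/threshold pair and keep the lowest weighted impurity."""
    best = None  # (score, feature_index, threshold)
    for f in range(len(rows[0])):
        for threshold in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= threshold]
            right = [y for r, y in zip(rows, labels) if r[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best


def build_tree(rows, labels, depth=0, max_depth=2):
    split = best_split(rows, labels)
    # Stop at the depth limit, on a pure node, or when no split is possible.
    if depth >= max_depth or len(set(labels)) == 1 or split is None:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority label
    _, f, t = split
    left = [i for i, r in enumerate(rows) if r[f] <= t]
    right = [i for i, r in enumerate(rows) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([rows[i] for i in left], [labels[i] for i in left],
                           depth + 1, max_depth),
        "right": build_tree([rows[i] for i in right], [labels[i] for i in right],
                            depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow tests from the root until a leaf (a plain label) is reached."""
    while isinstance(tree, dict):
        branch = "left" if row[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree


# Hypothetical applicants: [age, income in thousands].
rows = [[25, 30], [30, 60], [45, 80], [50, 40], [35, 90], [23, 20]]
labels = ["reject", "approve", "approve", "reject", "approve", "reject"]

tree = build_tree(rows, labels)
print(tree)
print(predict(tree, [40, 70]), predict(tree, [28, 35]))
```

The `max_depth` argument is the kind of limit on tree depth that helps keep overfitting in check.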

Linear Discriminant Analysis

Linear Discriminant Analysis is a statistical machine learning technique for classification and dimensionality reduction. It focuses on finding a linear combination of features that best separates two or more classes of objects or events. The basic idea is to project the data onto a lower-dimensional space with good class separability to avoid overfitting and reduce computational costs.

LDA assumes that the different classes have the same covariance matrix and that the data is normally distributed. It remains useful when the within-class frequencies are unequal, and it has proven quite robust in such scenarios. LDA is often used in pattern recognition, medical diagnosis, and machine learning applications where linear separability is key.
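For two classes, the projection can be sketched with Fisher's formulation of LDA: the direction is the inverse within-class scatter matrix applied to the difference of the class means. The sketch below is limited to two features and assumes equal class priors. The points and function names are hypothetical.

```python
def mean_vector(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def scatter(points, mean):
    """2x2 scatter matrix: sum of outer products of deviations from the mean."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mean[0], p[1] - mean[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fit_lda(class0, class1):
    m0, m1 = mean_vector(class0), mean_vector(class1)
    s0, s1 = scatter(class0, m0), scatter(class1, m1)
    # Pooled within-class scatter, reflecting the shared-covariance assumption.
    sw = [[s0[i][j] + s1[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    # Projection direction w = Sw^-1 (m1 - m0).
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    # With equal priors, the threshold is the projected midpoint of the means.
    midpoint = [(m0[0] + m1[0]) / 2, (m0[1] + m1[1]) / 2]
    threshold = w[0] * midpoint[0] + w[1] * midpoint[1]
    return w, threshold


def project(w, point):
    """Reduce a 2-D point to a single number along the discriminant direction."""
    return w[0] * point[0] + w[1] * point[1]


def predict(w, threshold, point):
    return 1 if project(w, point) > threshold else 0


# Hypothetical two-class data.
class0 = [(1, 2), (2, 1), (2, 3), (3, 2)]
class1 = [(6, 5), (7, 6), (7, 4), (8, 5)]

w, threshold = fit_lda(class0, class1)
print(w, threshold)
print(predict(w, threshold, (2, 2)), predict(w, threshold, (7, 5)))
```

The `project` function is the dimensionality-reduction step: each two-dimensional point becomes one value on a line where the classes are well separated.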

Naive Bayes

Naive Bayes is a simple yet powerful probabilistic classifier that applies Bayes’ theorem with strong (naive) independence assumptions between the features. It is particularly well suited to large datasets and performs surprisingly well on text classification problems, such as spam filtering and sentiment analysis. Despite its simplicity, Naive Bayes can sometimes outperform more sophisticated classification methods.

The ‘naive’ aspect of the algorithm comes from the assumption that the presence (or absence) of a particular feature of a class is unrelated to the presence (or absence) of any other feature, given the class variable. This assumption simplifies the computation, and although it’s a strong assumption, Naive Bayes works well in many complex real-world situations.
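A spam filter is the classic illustration. The sketch below is a small multinomial Naive Bayes classifier with add-one (Laplace) smoothing, a common choice that the text above does not specify. The four training messages are made up for the example.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(documents):
    """Count how often each word appears under each label."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in documents:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary


def classify(text, model):
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start from the log prior, then add one log likelihood per word.
        log_prob = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # Add-one smoothing keeps unseen word/label pairs from zeroing the score.
            count = word_counts[label][word] + 1
            log_prob += math.log(count / (total_words + len(vocabulary)))
        scores[label] = log_prob
    return max(scores, key=scores.get)


# Hypothetical training messages.
documents = [
    ("win free prize now", "spam"),
    ("free money offer", "spam"),
    ("meeting agenda attached", "ham"),
    ("lunch meeting tomorrow", "ham"),
]

model = train_naive_bayes(documents)
print(classify("free prize offer", model))
print(classify("meeting tomorrow", model))
```

Adding the per-word log probabilities is exactly where the independence assumption enters: each word contributes on its own, regardless of which other words appear.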

K-nearest Neighbors (K-NN)

K-nearest Neighbors is a simple yet effective non-parametric classification (and regression) algorithm. It operates on a very straightforward principle: it finds the ‘k’ closest data points (neighbors) in the training dataset for a given data point. It assigns the most frequent label (in classification) or the average/median outcome (in regression) of these neighbors to the new point.

The number ‘k’ is a user-defined constant, and the distance is typically calculated using methods like Euclidean or Manhattan distance. K-NN is especially popular due to its ease of understanding and implementation, and it often performs well in scenarios where the decision boundary is very irregular. However, it can become computationally intensive as the size of the data increases and can perform poorly with high-dimensional data due to the curse of dimensionality. K-NN also requires appropriate feature scaling for good performance and is sensitive to noisy data.
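The whole algorithm fits in a few lines. This minimal sketch uses Euclidean distance and majority voting. The points, labels, and the default `k=3` are hypothetical, and the features are assumed to be on comparable scales already.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train_points, train_labels, query, k=3):
    """Label the query with the most frequent label among its k nearest points."""
    ranked = sorted(zip(train_points, train_labels),
                    key=lambda pair: euclidean(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical, already-scaled training data.
train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["a", "a", "a", "b", "b", "b"]

print(knn_classify(train_points, train_labels, (2, 2)))
print(knn_classify(train_points, train_labels, (6, 5)))
```

Every prediction sorts the entire training set, which is why the cost grows with the amount of data.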

Random Forest

Random Forest is an ensemble learning technique for classification and regression tasks. It builds upon the concept of decision trees, but instead of relying on a single tree, it constructs many trees at training time and outputs the mode of the classes (for classification) or the mean prediction (for regression) of the individual trees. Random Forest introduces randomness in two ways:

- By randomly sampling the data points (with replacement) to train each tree (bootstrap aggregating or bagging).

- By randomly selecting a subset of features at each split point of the trees.

This randomness helps create a diverse set of trees, making the Random Forest more robust and accurate than individual decision trees, particularly by reducing overfitting. It also handles large datasets with higher dimensionality well, and some implementations can handle missing values automatically. However, Random Forest models can be complex and require significant computational resources, and they are not as easily interpretable as individual decision trees.
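Both sources of randomness can be seen in a deliberately small sketch. To keep it short, each "tree" here is only a one-split stump, which is a simplification of a real Random Forest. The data, the seed, and parameter names such as `n_trees` and `max_features` are assumptions made for this example.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(rows, labels, feature_indices):
    """A one-split tree that may only use the given (randomly chosen) features."""
    best = None  # (score, feature, threshold, left_label, right_label)
    for f in feature_indices:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t, majority(left), majority(right))
    if best is None:  # no usable split: always predict the majority label
        label = majority(labels)
        return (0, float("inf"), label, label)
    return best[1:]


def fit_forest(rows, labels, n_trees=15, max_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Randomness 1: bootstrap sample of the rows (with replacement).
        idx = [rng.randrange(len(rows)) for _ in rows]
        # Randomness 2: random subset of features considered at the split.
        features = rng.sample(range(len(rows[0])), max_features)
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], features))
    return forest


def predict_forest(forest, row):
    """Classification: return the mode of the individual tree predictions."""
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Hypothetical two-feature data with two classes.
rows = [[1, 2], [2, 1], [2, 3], [3, 2], [6, 7], [7, 6], [8, 8], [7, 9]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

forest = fit_forest(rows, labels)
print(predict_forest(forest, [1, 1]), predict_forest(forest, [9, 9]))
```

Because each stump sees a different sample and a different feature, individual stumps can disagree, and the vote smooths out their individual mistakes.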

Learning Vector Quantization (LVQ)

Learning Vector Quantization (LVQ) is a prototype-based, supervised learning algorithm often used for classification tasks. It operates by initially setting up a set of prototype vectors and iteratively adjusting them to better represent the distribution of the different classes in the training data. During the training process, the algorithm compares training examples to these prototypes. If a training example is incorrectly classified, the closest but incorrect class prototype is moved away from that example, and the prototype of the correct class is moved closer.

This process continues until the prototypes stabilize. LVQ is particularly valued for its simplicity and effectiveness in scenarios where a few key prototypes can represent the data, but it can be sensitive to the initial placement of the prototypes and the choice of learning rate.
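The sketch below implements the basic LVQ1 variant, which is slightly simpler than the update described above: only the nearest prototype moves, toward the example if their labels match and away from it otherwise. The data, initial prototypes, learning rate, and linear decay schedule are hypothetical choices.

```python
def nearest_prototype(prototypes, point):
    """Index of the prototype with the smallest squared distance to the point."""
    return min(
        range(len(prototypes)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(prototypes[i][0], point)),
    )


def train_lvq1(data, initial_prototypes, learning_rate=0.3, epochs=20):
    # Copy so the caller's initial prototypes are left untouched.
    prototypes = [(list(vector), label) for vector, label in initial_prototypes]
    for epoch in range(epochs):
        rate = learning_rate * (1 - epoch / epochs)  # assumed linear decay
        for point, label in data:
            vector, proto_label = prototypes[nearest_prototype(prototypes, point)]
            # Attract on a correct match, repel on an incorrect one.
            sign = 1.0 if proto_label == label else -1.0
            for d in range(len(vector)):
                vector[d] += sign * rate * (point[d] - vector[d])
    return prototypes


def classify(prototypes, point):
    return prototypes[nearest_prototype(prototypes, point)][1]


# Hypothetical data: class "a" near (1.5, 1.5), class "b" near (6.5, 6.5).
data = [
    ((1, 1), "a"), ((1, 2), "a"), ((2, 1), "a"), ((2, 2), "a"),
    ((6, 6), "b"), ((6, 7), "b"), ((7, 6), "b"), ((7, 7), "b"),
]
initial_prototypes = [([0.0, 0.0], "a"), ([8.0, 8.0], "b")]

prototypes = train_lvq1(data, initial_prototypes)
print(prototypes)
print(classify(prototypes, (1.5, 1.5)), classify(prototypes, (6.5, 6.5)))
```

After training, classification needs only the prototypes rather than the full training set, which is what makes the model compact.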

Support Vector Machine (SVM)

Support Vector Machine (SVM) is a versatile supervised learning algorithm widely used for classification and regression tasks. It finds the optimal hyperplane or set of hyperplanes in a high-dimensional space that best separates different classes with the maximum margin. In simpler terms, SVM seeks the decision boundary that stands farthest from the nearest data points of any class, known as support vectors.

For non-linearly separable data, SVM employs the kernel trick to map the data into a higher-dimensional space where a linear separation is possible. Key strengths of SVM include its effectiveness in high-dimensional spaces and its versatility in handling both linear and non-linear data. However, it can be computationally intensive and requires careful parameter tuning, particularly in the choice of the kernel function.
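For the linear case, the margin idea can be sketched by minimizing the regularized hinge loss with stochastic sub-gradient descent. This is one simple way to train a linear SVM, not the solver that production libraries use, and it does not cover the kernel trick. The centered data, learning rate, regularization strength, and epoch count are assumptions.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def train_linear_svm(points, labels, lr=0.01, reg=0.01, epochs=200):
    """Sub-gradient descent on hinge loss plus an L2 penalty; labels are +1/-1."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (dot(w, x) + b)
            if margin < 1:
                # Inside the margin or misclassified: push the boundary away.
                w = [wi + lr * (y * xi - reg * wi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Safely classified: only apply the shrinking penalty.
                w = [wi - lr * reg * wi for wi in w]
    return w, b


def predict(w, b, point):
    return 1 if dot(w, point) + b >= 0 else -1


# Hypothetical, roughly centered two-class data.
points = [(-2, -2), (-3, -1), (-1, -3), (2, 2), (3, 1), (1, 3)]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(points, labels)
print(w, b)
print(predict(w, b, (-2, -2)), predict(w, b, (3, 3)))
```

The `margin < 1` test is what distinguishes this from a plain perceptron: points that are classified correctly but sit too close to the boundary still trigger an update.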

Read more: Exploring Enterprise Generative AI Models – Revolutionizing Business Processes

Ready To Experience Smart AI Models?

Implementing AI models can increase the credibility of your software, but only if it is done correctly. Professional AI engineers are a must for any AI-based project. At MMCGBL, we have experienced AI engineers who can help you integrate multiple AI models to make your software more capable.

We expect more innovation in AI and are ready to adopt new capabilities as they arrive. One of this year’s biggest achievements is completing 30+ AI-based projects, which is proof of our capabilities. Let’s join hands!